Jorge BM

Aug 31, 2024

3 Innovative Approaches to AI-Driven Healthcare Implementation

AI applications in healthcare have received a lot of attention in recent years, but implementation issues remain. To address the adoption, use, and integration of AI into healthcare practices and systems, I present three frameworks that provide valuable insights into the promise and challenges of applying AI in healthcare settings.

What is limiting AI technology innovations in healthcare?

Artificial Intelligence (AI) currently dominates conversations in technology circles across many environments and industries. It can seem as if every new technology must carry the term, or as if any artefact gains value simply by being stamped with the “AI” seal. Use and misuse of the term aside, however, AI applications are in many cases proving to be a genuine new wave of innovation and technological advancement.

Healthcare, as a sector, is also riding this AI wave and, like many others, is trying to work out what implementation requires. There is certainly more buzz around AI now than ever before, which increases the possibilities for developing new applications. However, despite the clear need for societies worldwide to find solutions to an ageing population, cost pressures, limited resources, and the sustainable delivery of healthcare, many actors are still reluctant to invest heavily in AI development for healthcare.

This premise guided my search for potential solutions for healthcare as a complex socio-technical system, and the first step was to choose approaches or frameworks that could be used to analyse and carry out the development and adoption of AI in this sector. To my surprise, there are currently fewer examples than I would have expected. This may be partly explained by the relatively recent breakthrough of AI, although there are other reasons, which I discuss below. But don't worry: in this post, I will show you three case examples that may be useful for future research on, and implementation of, AI in healthcare.

Conceptual illustration of complexity in AI innovations in healthcare

Framework 1: Technological Innovation System (TIS)

This model, which is widely recognised among scholars, was originally conceived by Carlsson and Stankiewicz (1991) [1] under the name “technological systems”. However, it was Bergek et al. (2008) and Hekkert et al. (2007) [2,3] who shifted the focus of innovation models towards functional patterns, such as flows of knowledge and learning, rather than goods and services alone. In other words, TIS focuses on a dynamic network of actors engaged in the creation, dissemination, and application of technology within a given institutional framework, operating in a particular economic or industrial domain.

Following this Swedish line of innovation research, a recent article by Petra Apell and Henrik Eriksson, “Artificial intelligence (AI) healthcare technology innovations: the current state and challenges from a life science industry perspective” [4], applied TIS through a mixed-methods approach to understand the innovation processes behind AI in healthcare. Their findings were quite promising, not only for improving the Swedish health system, the context of their study, but also as an opportunity to export the framework to other suitable health systems worldwide, involving the many partners (academia, market, and governance) that could make use of it.

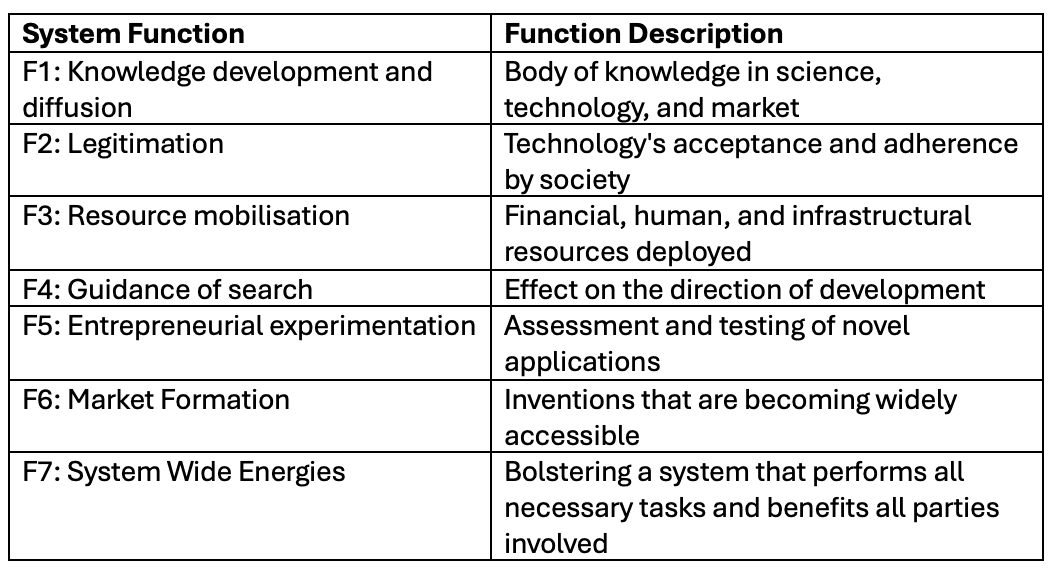

Following a functional approach, the researchers used a combined TIS framework (Table 1) with seven functions to analyse the innovation system and draw the following conclusions:

(Table 1: Technological Innovation System (TIS) framework)

In this regard, the authors identified several constraints and obstacles related to healthcare AI, which shed light on the need for a more compelling investment case. First, not all decision-makers and managers had sufficient knowledge to understand AI innovations while, conversely, not all AI experts had the business vision to deliver a solid proposal for a large AI investment. In my opinion, this is a symptom of a communication breakdown, or of a lack of connections between disciplines that are assumed to be separate.

Second, data accessibility is a crucial aspect of any AI solution, and it has long been controversial in the way healthcare systems are configured. The restriction stems from a lack of resource mobilization and of justification for building data-fetching infrastructure. Restrictive guidelines governing industry-healthcare collaboration frequently lead to issues with data responsibility, privacy, and consent for data reuse. In practice, accessing large health datasets is far more difficult than, say, a business obtaining an individual patient's data without government involvement. These restrictions, however, exist as safeguards against unauthorized access to patient information, which could otherwise lead to serious problems. For this reason, I concur with the authors' assessment that one possible alternative would be a "shared project portfolio platform", in which government agencies oversee an interdisciplinary partnership among the actors mentioned above: academia, the market, and governance. This could expedite the process by granting access to additional resources, which is another way of saying “More data, please”.

However, one fundamental aspect is sometimes overlooked. From my experience implementing AI-based decision support systems (DSS) with multidisciplinary experts, I have found that weaknesses in F1 (the first function in Table 1), in the form of a lack of connectedness between technological knowledge and domain knowledge, are the initial cause of weak proposals for AI development in healthcare. The study also provides an example, in which a healthcare professional declared: “Just because I am knowledgeable in various fields of medicine, does not make me capable of developing innovations relating to my field. We need to collaborate with the companies”.

This statement shows clearly that AI in healthcare should aim for multidisciplinary collaboration and knowledge sharing, rather than the isolated accounts of individual experts. I base this conclusion on the fact that innovation and collaboration go hand in hand: both need to be properly initiated, communicated, and connected to articulate concrete solutions that justify commercialising an AI solution. Otherwise, AI in healthcare will remain the domain of startups and small businesses, which cannot scale their solutions unless they are acquired by, or merged with, medium-sized or large companies with potentially larger budgets. This is because, at present, decision-makers in large companies still do not see a sufficient return on investment.


Framework 2: Unified Theory of Acceptance and Use of Technology (UTAUT)

Another model that has been used in studies of healthcare AI, although not frequently, is the Unified Theory of Acceptance and Use of Technology. This framework resulted from the work of Venkatesh et al. (2003), who integrated elements from multiple information technology acceptance models into one. In simple terms, this framework is intended to “help managers understand the factors that influence acceptance and determine the likelihood of success for the introduction of new technology. This allows them to proactively design interventions (such as marketing campaigns, training programs, and other initiatives) that are aimed at user populations that may be less likely to accept and use new systems” [5].

In this regard, the model uses predictor variables, called constructs, to explain a dependent variable (such as behavioural intention), and the effect of each construct can be altered by specific moderators (e.g. age, gender, experience, profession). Following this concept, Cornelissen et al. (2022) applied UTAUT in their article “The Drivers of Acceptance of Artificial Intelligence–Powered Care Pathways Among Medical Professionals: Web-Based Survey Study” [6]. More specifically, the study analysed the behavioural intention of 67 medical professionals in the Netherlands using the following medical and non-medical constructs: “Medical performance expectancy (MEPE)”, “Nonmedical performance expectancy (NMPE)”, “Effort expectancy (EE)”, “Social influence patients (SIPA)”, “Social influence medical (SIME)”, “Facilitating conditions (FC)”, “Perceived trust (PT)”, “Anxiety (AN)”, “Professional identity (PI)” and “Innovativeness (IN)”.
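To make this structure concrete, here is a minimal Python sketch of how a UTAUT-style model relates constructs, moderators, and a dependent variable, assuming a simple linear form in which a moderator acts through a product (interaction) term. The class `UtautModel`, the method `predict_intention`, and every coefficient are hypothetical placeholders, not estimates from Venkatesh et al. or Cornelissen et al.; in a real study the weights would be estimated from survey data, for example with regression or structural equation modelling. Construct scores are assumed to be on a centred scale.

```python
from dataclasses import dataclass, field


@dataclass
class UtautModel:
    """Hypothetical linear UTAUT-style model (illustrative weights only)."""

    intercept: float
    # Main effect of each construct (e.g. "MEPE", "EE") on behavioural intention.
    weights: dict[str, float]
    # Moderation effects: (construct, moderator) -> coefficient of their product.
    interactions: dict[tuple[str, str], float] = field(default_factory=dict)

    def predict_intention(self, constructs: dict[str, float],
                          moderators: dict[str, float]) -> float:
        """Return the linear predictor of behavioural intention.

        A moderator changes how strongly a construct influences intention
        by adding the term weight * construct * moderator.
        """
        score = self.intercept
        for name, weight in self.weights.items():
            score += weight * constructs[name]
        for (construct, moderator), weight in self.interactions.items():
            score += weight * constructs[construct] * moderators[moderator]
        return score


if __name__ == "__main__":
    # Hypothetical example: experience (0 = novice, 1 = experienced)
    # weakens the effect of effort expectancy (EE).
    model = UtautModel(
        intercept=0.0,
        weights={"MEPE": 0.5, "EE": 0.3},
        interactions={("EE", "experience"): -0.2},
    )
    scores = {"MEPE": 1.0, "EE": 1.0}
    print(round(model.predict_intention(scores, {"experience": 0.0}), 2))  # 0.8
    print(round(model.predict_intention(scores, {"experience": 1.0}), 2))  # 0.6
```

The same construct scores yield different predicted intentions depending on the moderator, which is the essence of moderation in UTAUT.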

The findings showed that, among the non-medical constructs, NMPE, EE, PT, and PI were the most significant predictors of acceptance of AI-powered care pathways, while MEPE, a medical construct, was the most significant predictor overall. To keep this brief, however, I would like to draw attention to how this study used UTAUT as a quantitative technique to characterise the acceptance of healthcare AI among medical practitioners. These quantitative indicators could be paired with a qualitative approach, within a mixed-methods research design, to provide a more comprehensive understanding. In the end, I think this framework's main objective should be to provide insight into aspects that are relevant to other breakthroughs in healthcare AI. It is also worth noting that the strong influence of medical performance expectancy on acceptance reflects a need already identified with the previous framework (TIS): the advantages and added value of AI solutions for care pathways still lack compelling evidence to support widespread implementation in the healthcare industry.

Conceptual illustration of AI supporting humans for healthcare

Framework 3: Non-adoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS)

The name of this framework is hard to say out loud, but the study I will use to illustrate it has been cited by a large number of articles.

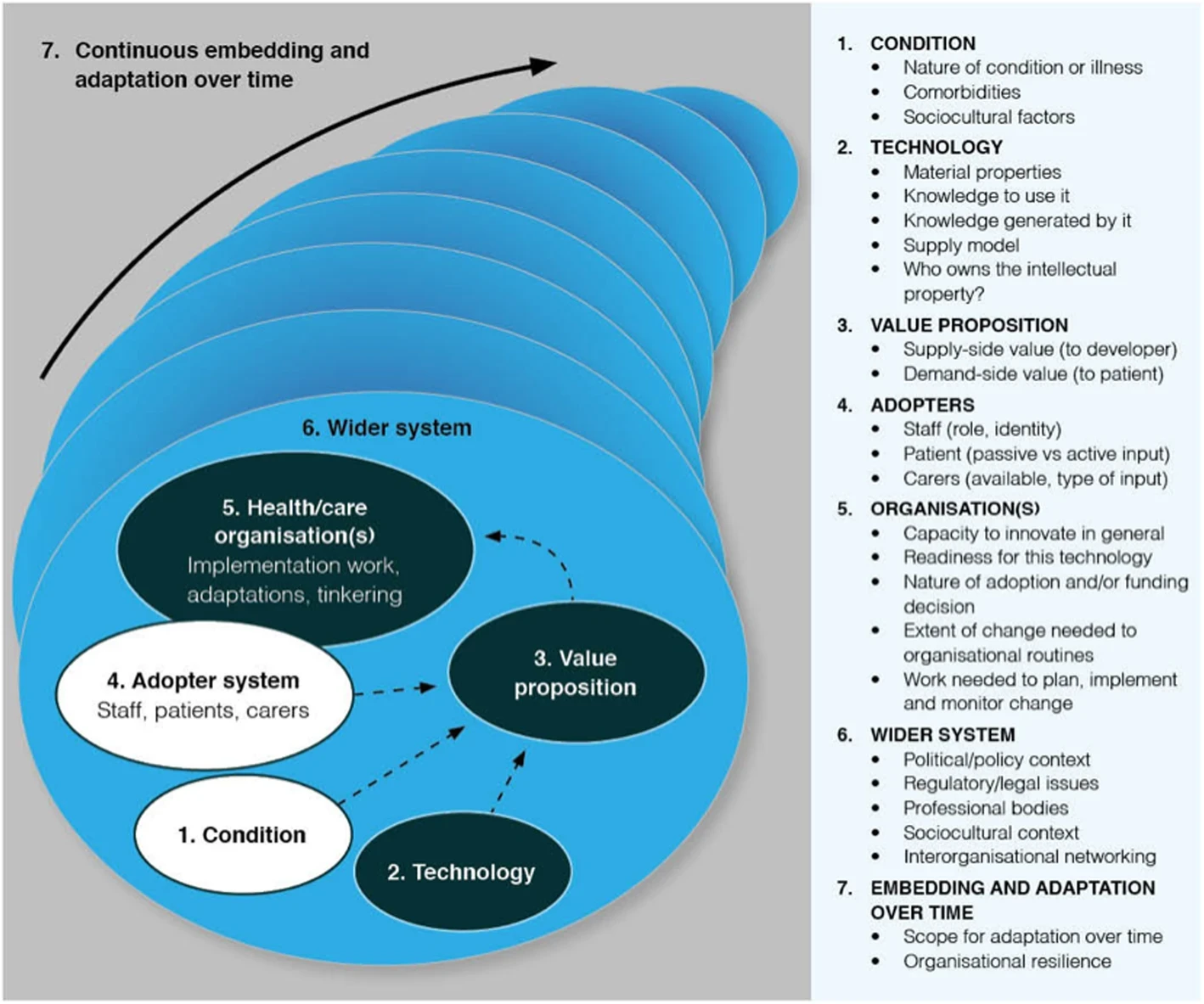

First of all, Greenhalgh et al. (2017) created the NASSS framework from an extensive and thorough review of previous technology implementation frameworks, with the difference that it was then tested in six empirical case studies, to help predict and evaluate the success of technology-supported health or social care programmes. As the primary components of the approach, the authors defined a series of domains, each explored through questions and classified at one of three levels: simple, complicated, or complex. The domains are “the condition”, “the technology”, “the value proposition”, “the adopter system”, “the health or care organization(s)”, and “the wider context” (Figure 1), plus a seventh domain that considers “embedding and adaptation over time”. In the authors' words, the framework was designed to be used intuitively to guide conversations and ideation, rather than as a checklist [7].
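Although the authors stress that NASSS is not a checklist, its structure can be easier to grasp when written down as data. The following minimal Python sketch records a level (simple, complicated, or complex) for each of the seven domains and lists the domains rated complex, which a team might then prioritise for discussion. The names `Level`, `DOMAINS`, and `complex_domains` are hypothetical, and the sketch does not reproduce any scoring rule from Greenhalgh et al. (2017).

```python
from enum import Enum


class Level(Enum):
    """The three levels a NASSS domain can be classified at."""
    SIMPLE = 1
    COMPLICATED = 2
    COMPLEX = 3


# The seven NASSS domains, in the order described above.
DOMAINS = (
    "condition",
    "technology",
    "value proposition",
    "adopter system",
    "health or care organization(s)",
    "wider context",
    "embedding and adaptation over time",
)


def complex_domains(ratings: dict[str, Level]) -> list[str]:
    """Return the domains rated COMPLEX, in framework order.

    Raises ValueError for unknown or unrated domains, so that every
    domain is considered at least once during the discussion.
    """
    unknown = set(ratings) - set(DOMAINS)
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")
    missing = [d for d in DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"unrated domains: {missing}")
    return [d for d in DOMAINS if ratings[d] is Level.COMPLEX]


if __name__ == "__main__":
    # Hypothetical ratings for an imagined ML-based triage tool.
    ratings = {d: Level.SIMPLE for d in DOMAINS}
    ratings["technology"] = Level.COMPLEX
    ratings["adopter system"] = Level.COMPLICATED
    print(complex_domains(ratings))  # ['technology']
```

Requiring a rating for every domain mirrors the framework's intent of looking at the whole system rather than at the technology alone.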

(Figure 1: Non-adoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework [8])

As mentioned previously, current implementations of AI in healthcare face a wide range of challenges from multiple perspectives: sociocultural, economic, political, and systemic. Shaw et al. (2019) also considered this premise in their article “Artificial Intelligence and the Implementation Challenge”, in which they applied the NASSS framework to scrutinise how AI, and more specifically its subfield machine learning (ML), is used in two major categories of application, “decision support” and “automation”, across three types of task: clinical, operational, and epidemiological [8].

In this regard, the paper highlights two key problems that health systems may run into when implementing ML solutions. First, “the Role of Corporations” points to the massive volumes of data that big businesses require, which raises concerns about data collection, regulation, and protection in political jurisdictions whose healthcare regulation lags far behind. Second, “the Changing Nature of AI-enabled Healthcare” examines how these applications affect a complex, multi-institutional, and multifaceted system. Put another way, the authors contend that because the healthcare system is so complicated from institutional, legal, cultural, regulatory, and moral standpoints, AI implementation still requires specific strategies based on the tasks involved. Regarding these issues, I argue that the implementation science community is approaching ML applications from too broad a standpoint, which makes stakeholders doubtful when facing such a significant investment; when the implementation task is narrowed down, however, its impact is reduced and becomes more manageable. Dream large, but evidently not when it comes to healthcare, since this industry seems most comfortable with small, targeted adjustments.

Resources

1. Carlsson B, Stankiewicz R. On the nature, function and composition of technological systems. J Evol Econ 1991 Jun;1(2):93–118. doi: 10.1007/BF01224915

2. Bergek A, Jacobsson S, Carlsson B, Lindmark S, Rickne A. Analyzing the functional dynamics of technological innovation systems: A scheme of analysis. Research Policy 2008 Apr;37(3):407–429. doi: 10.1016/j.respol.2007.12.003

3. Hekkert MP, Suurs RAA, Negro SO, Kuhlmann S, Smits REHM. Functions of innovation systems: A new approach for analysing technological change. Technological Forecasting and Social Change 2007 May;74(4):413–432. doi: 10.1016/j.techfore.2006.03.002

4. Apell P, Eriksson H. Artificial intelligence (AI) healthcare technology innovations: the current state and challenges from a life science industry perspective. Technology Analysis & Strategic Management 2023 Feb 1;35(2):179–193. doi: 10.1080/09537325.2021.1971188

5. Venkatesh V, Morris MG, Davis GB, Davis FD. User Acceptance of Information Technology: Toward a Unified View. MIS Quarterly 2003;27(3):425–478. doi: 10.2307/30036540

6. Cornelissen L, Egher C, Van Beek V, Williamson L, Hommes D. The Drivers of Acceptance of Artificial Intelligence–Powered Care Pathways Among Medical Professionals: Web-Based Survey Study. JMIR Form Res 2022 Jun 21;6(6):e33368. doi: 10.2196/33368

7. Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A’Court C, Hinder S, Fahy N, Procter R, Shaw S. Beyond Adoption: A New Framework for Theorizing and Evaluating Nonadoption, Abandonment, and Challenges to the Scale-Up, Spread, and Sustainability of Health and Care Technologies. J Med Internet Res 2017 Nov 1;19(11):e367. doi: 10.2196/jmir.8775

8. Shaw J, Rudzicz F, Jamieson T, Goldfarb A. Artificial Intelligence and the Implementation Challenge. J Med Internet Res 2019 Jul 10;21(7):e13659. doi: 10.2196/13659